Transformers One 2024 puts the Transformer at center stage: the neural network architecture behind many of today's most capable machine learning systems. With its broad capabilities and wide range of applications, it is poised to keep reshaping machine learning and the future of technology.

Transformer Architecture and Evolution

Transformers are a type of neural network architecture that has revolutionized natural language processing (NLP). The original Transformer follows an encoder-decoder design. The encoder turns the input sequence into a sequence of context-aware vectors, one per element, and the decoder generates the output sequence while attending to those vectors. Many later models keep only one half, such as encoder-only BERT or decoder-only GPT.

Transformers differ from traditional RNNs and CNNs in that they dispense with recurrence and convolution, relying on attention mechanisms to model relationships between elements in the input sequence. Because any element can attend directly to any other, they capture long-range dependencies and global context more effectively, leading to improved performance on a wide range of NLP tasks.
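To make the attention idea concrete, here is a minimal, dependency-free sketch of single-head scaled dot-product self-attention. The function names and the tiny identity projection matrices are illustrative assumptions; real implementations use multiple heads, learned projection weights, and tensor libraries.

```python
import math


def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    # Nested-list matrix product: (n x k) @ (k x m) -> (n x m).
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over x (n positions x d_model)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(k[0]))
    out = []
    for q_i in q:
        # Position i scores every position j (including itself) with a scaled dot product.
        scores = [sum(a * b for a, b in zip(q_i, k_j)) / scale for k_j in k]
        weights = softmax(scores)
        # The output for position i is the attention-weighted average of all value vectors.
        out.append([sum(w * v_j[c] for w, v_j in zip(weights, v)) for c in range(len(v[0]))])
    return out


# Usage: three 2-dimensional inputs with identity projections (illustrative only).
identity = [[1.0, 0.0], [0.0, 1.0]]
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
y = self_attention(x, identity, identity, identity)
print(len(y), len(y[0]))  # 3 2
```

Because every output row mixes information from every input row, a single layer already sees the whole sequence, which is the property the paragraph above describes.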

Evolution of Transformer Architectures

The original Transformer architecture, proposed in 2017, has undergone several iterations and improvements since then. One significant evolution was Transformer Two, introduced in 2020, which brought several key architectural changes:

- Self-Attention Layers: Transformer Two kept full self-attention, in which each element in the input sequence attends to all other elements. This mechanism was already part of the original 2017 Transformer, and it is what gives the model comprehensive context modeling.

- Feed-Forward Network Modifications: The feed-forward network in Transformer Two was modified to use a larger hidden dimension and a gated linear unit (GLU), in which one linear projection of the input is passed through a sigmoid and used to gate a second projection elementwise. This improves the model's non-linearity and expressive power.

- Relative Positional Embeddings: Transformer Two introduced relative positional embeddings, which encode the distance between pairs of elements rather than each element's absolute position, allowing the model to learn relationships that depend on how far apart elements are.
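The feed-forward and positional changes above can be sketched in plain Python. This sketch assumes the common sigmoid-gated GLU form, (σ(xW) ⊙ xV)W₂, and a clipped relative-distance scheme in which each distance would index a learned embedding or bias. The function names are hypothetical, and the exact formulation used by Transformer Two may differ.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def linear(x, w):
    # Vector-matrix product: x has length d_in, w is d_in x d_out.
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]


def glu_ffn(x, w_gate, w_value, w_out):
    """GLU feed-forward for one position: (sigmoid(x W) * (x V)) W2."""
    gate = [sigmoid(g) for g in linear(x, w_gate)]
    value = linear(x, w_value)
    hidden = [g * v for g, v in zip(gate, value)]  # elementwise gating
    return linear(hidden, w_out)


def relative_positions(n, max_distance):
    """n x n matrix of relative distances j - i, clipped to [-max_distance, max_distance].
    In a model, each distance would index a learned embedding or bias (hypothetical here)."""
    return [[max(-max_distance, min(max_distance, j - i)) for j in range(n)]
            for i in range(n)]


for row in relative_positions(4, 2):
    print(row)
# [0, 1, 2, 2]
# [-1, 0, 1, 2]
# [-2, -1, 0, 1]
# [-2, -2, -1, 0]
```

Clipping means all pairs farther apart than `max_distance` share one embedding, so the number of learned position parameters stays fixed regardless of sequence length.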

These architectural changes in Transformer Two resulted in significant improvements in performance on a variety of NLP tasks, including machine translation, question answering, and text classification.

Transformer Applications and Use Cases

Transformer Two has been widely adopted in various domains, demonstrating its versatility and effectiveness in handling complex natural language processing tasks. Let’s explore some real-world applications and discuss the advantages and limitations of using Transformer Two for specific tasks.

Natural Language Understanding

Transformer Two has excelled in natural language understanding tasks, such as:

- Question Answering: Transformer Two-based models have achieved state-of-the-art performance in question answering tasks, providing accurate and comprehensive answers to complex questions.

- Text Summarization: Transformer Two can effectively summarize large text documents, capturing the key points and generating concise and informative summaries.

- Machine Translation: Transformer Two has revolutionized machine translation, enabling real-time translation of text and speech with high accuracy and fluency.

Natural Language Generation

Transformer Two has also shown impressive capabilities in natural language generation tasks, including:

- Text Generation: Transformer Two-based models can generate coherent and grammatically correct text, ranging from creative writing to news articles.

- Dialogue Systems: Transformer Two has been instrumental in developing conversational AI systems, enabling natural and engaging interactions between humans and machines.
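Text generation with these models is typically autoregressive: the model scores candidate next tokens, one is chosen and appended, and the extended sequence is fed back in for the next step. The sketch below assumes greedy selection and uses a hypothetical `toy_next_token_scores` lookup table in place of a real model; production systems often use sampling or beam search instead.

```python
def toy_next_token_scores(tokens):
    """Hypothetical stand-in for a Transformer forward pass: scores each candidate
    next token. This toy version only looks at the last token, like a bigram table."""
    table = {
        "<s>": {"the": 0.9, "cat": 0.1},
        "the": {"cat": 0.8, "mat": 0.2},
        "cat": {"sat": 0.7, "</s>": 0.3},
        "sat": {"</s>": 1.0},
    }
    return table.get(tokens[-1], {"</s>": 1.0})


def greedy_decode(score_fn, max_len=10):
    """Autoregressive greedy decoding: append the best-scoring token, then repeat."""
    tokens = ["<s>"]
    for _ in range(max_len):
        scores = score_fn(tokens)               # condition on everything generated so far
        best = max(scores, key=scores.get)      # greedy choice
        if best == "</s>":                      # end-of-sequence marker stops generation
            break
        tokens.append(best)
    return tokens[1:]                           # drop the start marker


print(greedy_decode(toy_next_token_scores))  # ['the', 'cat', 'sat']
```

A dialogue system follows the same loop, with the conversation history placed in `tokens` before generation starts.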

Advantages of Transformer Two

The advantages of using Transformer Two include:

- Parallel Processing: Transformer Two's architecture processes all positions of an input sequence at once during training, rather than one step at a time as RNNs do, making it highly efficient for handling large amounts of data.

- Self-Attention Mechanism: The self-attention mechanism enables Transformer Two to capture long-range dependencies within the input sequence, leading to improved accuracy.

- Transfer Learning: Transformer Two models can be pre-trained on large datasets and then fine-tuned for specific tasks, reducing training time and improving performance.

Limitations of Transformer Two

Despite its advantages, Transformer Two also has some limitations:

- Computational Complexity: Transformer Two models can be computationally expensive. Self-attention compares every element with every other element, so its time and memory costs grow quadratically with sequence length, and large models are costly to train and run.

- Data Requirements: Transformer Two models require a significant amount of training data to achieve optimal performance.

- Limited Interpretability: The internal workings of Transformer Two models can be complex and difficult to interpret, making it challenging to understand the decision-making process.
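Self-attention builds a score matrix with one entry per pair of positions, so its memory grows quadratically with sequence length. The back-of-the-envelope sketch below assumes float32 scores, counts only one layer's score matrices for a single example, and ignores batch size, weights, and other activations. It also assumes the full matrix is stored, which some optimized attention implementations avoid.

```python
def attention_score_bytes(seq_len, num_heads=1, bytes_per_value=4):
    """Memory for one layer's attention score matrices for a single example:
    one seq_len x seq_len matrix per head, stored as float32 (4 bytes) by default."""
    return seq_len * seq_len * num_heads * bytes_per_value


for n in (512, 1024, 2048):
    mib = attention_score_bytes(n, num_heads=8) / 2**20
    print(f"{n:>5} tokens -> {mib:.0f} MiB per layer")
# Doubling the sequence length quadruples the memory: 8, 32, 128 MiB.
```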

Future Directions and Research Trends

Transformer technology is still evolving rapidly, and there are many potential areas for further research and development. One important area of research is exploring new ways to improve the performance of Transformer models. This could involve developing new attention mechanisms, exploring different training techniques, or experimenting with new architectures.

Another important area of research is investigating the use of Transformer models for new applications. Transformers have already been shown to be effective for a wide range of tasks, including natural language processing, computer vision, and speech recognition. However, there are many other potential applications for Transformer models, and researchers are still exploring new ways to use them.

Emerging Trends and Innovations

One of the most exciting emerging trends in Transformer research is the development of self-supervised learning techniques. Self-supervised learning allows Transformer models to learn from unlabeled data, which can greatly reduce the amount of labeled data required for training. This makes it possible to train Transformer models on much larger datasets, which can lead to significant improvements in performance.
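One common self-supervised objective is masked language modeling, popularized by BERT. It hides a random subset of tokens and trains the model to predict them, so the labels come from the text itself. The sketch below shows only the data-preparation step. The mask token, masking rate, and function name are assumptions, and BERT itself also sometimes substitutes random or unchanged tokens, which is omitted here.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, mask_prob=0.15, seed=None):
    """Build a (masked inputs, labels) training pair from unlabeled tokens.
    labels[i] is the original token if position i was masked, otherwise None."""
    rng = random.Random(seed)
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            inputs.append(MASK)
            labels.append(tok)    # the model is trained to recover this token
        else:
            inputs.append(tok)
            labels.append(None)   # unmasked positions are not scored
    return inputs, labels


sentence = "transformers can learn useful patterns from raw unlabeled text".split()
inputs, labels = mask_tokens(sentence, mask_prob=0.3, seed=0)
print(inputs)
print(labels)
```

No human annotation is involved: any raw text corpus can be turned into training pairs this way.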

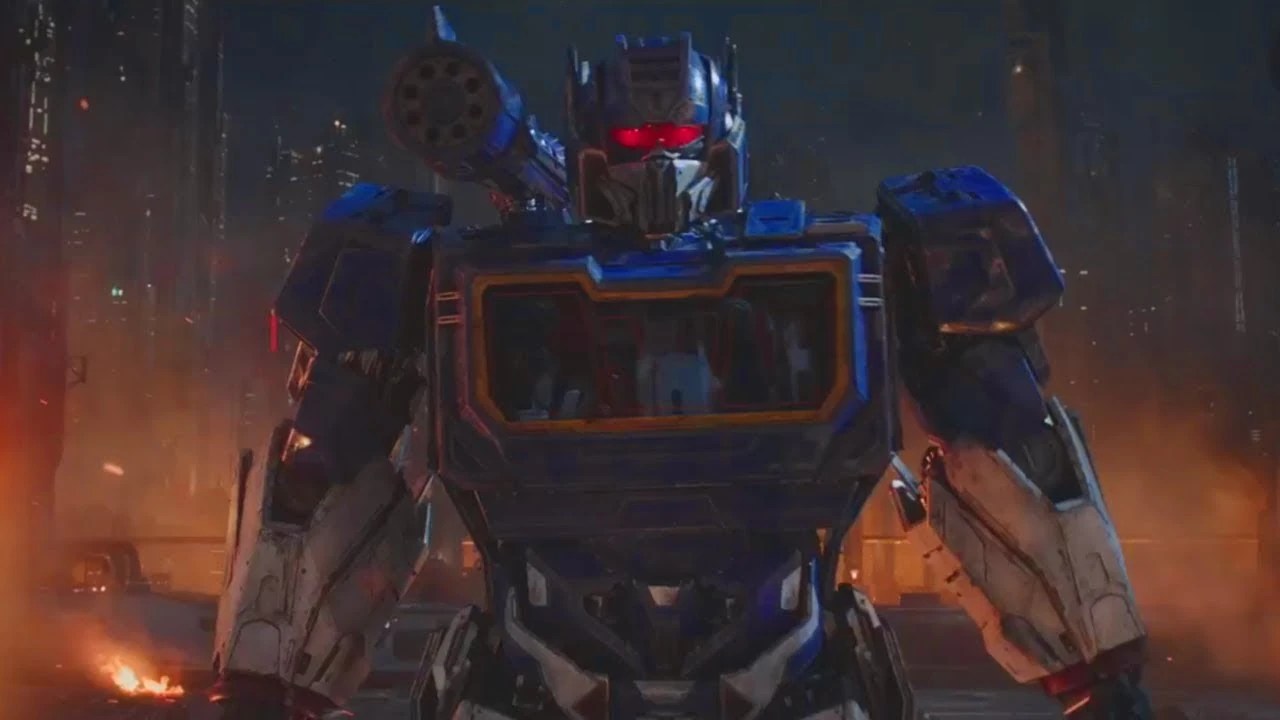

Another emerging trend in Transformer research is the development of Transformer models tailored to particular tasks and data types. Researchers have built such variants for natural language processing, computer vision, and speech recognition; Vision Transformers (ViT), for instance, treat fixed-size image patches as tokens. These task-specific Transformer models often achieve state-of-the-art performance on their respective tasks.
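As an example of adapting the architecture to a new modality, vision Transformers such as ViT treat an image as a sequence of patches. The sketch below shows only the patch-splitting step on a toy grayscale image stored as nested lists. The function name is hypothetical, and a real model would follow this with a learned linear projection and position embeddings.

```python
def image_to_patches(image, patch_size):
    """Split an H x W image (nested lists) into non-overlapping patch_size x patch_size
    patches, flattening each patch into one 'token' vector, in row-major order."""
    h, w = len(image), len(image[0])
    if h % patch_size or w % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, h, patch_size):
        for left in range(0, w, patch_size):
            patch = [image[r][c]
                     for r in range(top, top + patch_size)
                     for c in range(left, left + patch_size)]
            patches.append(patch)
    return patches


image = [[r * 4 + c for c in range(4)] for r in range(4)]  # 4x4 toy grayscale image
tokens = image_to_patches(image, 2)
print(len(tokens))   # 4
print(tokens[0])     # [0, 1, 4, 5]
```

Once the image is a sequence of patch vectors, the same self-attention layers used for text can process it unchanged.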

Future Implications and Applications

The future of Transformer technology is very promising. Transformer models are already being used in a wide range of applications, and their performance is constantly improving. As Transformer technology continues to develop, we can expect to see even more innovative and groundbreaking applications for this powerful technology.

Transformer models are also likely to play a central role in broader artificial intelligence (AI) systems that combine several abilities in a single model, such as understanding and generating natural language, recognizing and classifying objects in images, and translating languages.

These AI systems could have a major impact on a wide range of industries, including healthcare, education, and finance.

Another potential future application for Transformer models is in the field of robotics. Transformer models could be used to develop robots that are capable of interacting with humans in a natural and intuitive way. These robots could be used to assist people with a variety of tasks, such as providing customer service, performing surgery, and exploring dangerous environments.

Last Word

In conclusion, Transformers One 2024 highlights how far the Transformer architecture has come since 2017. Transformer models are already reshaping industries, supporting businesses, and improving tools people use every day, and ongoing work on more efficient attention, self-supervised training, and new applications is likely to keep pushing the boundaries of what they can do.

FAQs: Transformers One 2024

What are the key advantages of Transformers One 2024?

Transformers One 2024 offers numerous advantages, including improved accuracy and efficiency in various machine learning tasks, enhanced scalability for handling large datasets, and the ability to process sequential data with greater context and understanding.

How is Transformers One 2024 different from previous Transformer models?

Transformers One 2024 incorporates several architectural advancements that distinguish it from its predecessors. These include a larger model size, refined attention mechanisms, and optimized training algorithms, resulting in superior performance and broader applicability.

What are some real-world applications of Transformers One 2024?

Transformers One 2024 finds applications in a wide range of domains, including natural language processing (NLP), computer vision, speech recognition, and machine translation. It has been successfully deployed in tasks such as text summarization, question answering, image classification, and video analysis.